By Franc Romanowski

Correspondent

The Office of Title IX and Sexual Misconduct has proposed updates to the sexual harassment policy that add material relating to artificial intelligence, according to a Dec. 3 email from Chelsea Jacoby, the office’s director.

The proposed rule, which is under review by the Committee on Student and Campus Community, would prohibit the creation or sharing of artificially generated materials of individuals engaging in explicit activities, according to the email. Specifically, the rule targets what are known as deepfakes, or digitally altered media used to spread false information, in an attempt to keep pace with the rapid advancement of AI and the dangers it poses.

“The creation or distribution of deepfakes poses serious risks, including reputational harm, privacy violations, and significant emotional distress, and can have real legal and disciplinary consequences,” Jacoby wrote in the email. “The College does not condone or tolerate the use of deepfake technology for these malicious means, and any such behavior will be addressed promptly and appropriately (once this updated policy is approved).”

The proposed changes come after the College gave the campus community access to three AI products: Google Gemini, Microsoft Copilot and Google Notebook LM. The campus-wide email that announced the access also came with a reminder from the College’s Vice President for Operations and Chief Information Officer Sharon Blanton, as well as other administrative staff of the College, to explore the technology mindfully.

“As with all technologies, these tools should be used responsibly,” the Oct. 2 email read in part. “Students should be mindful of course policies and instructor expectations surrounding the use of any AI tools,” the officials added.

Already, students across the United States have become victims of explicit and intimate deepfakes, including in the surrounding area. In one of the most recent incidents, students at Radnor High School in Pennsylvania were subjects of an “inappropriate” deepfake video, according to a report from Elizabeth Worthington of 6abc Philadelphia on Dec. 9. The Radnor Police Department is calling the investigation “active,” according to Worthington’s reporting.

Women are the most at risk of becoming victims of deepfake technology. Of the deepfake videos on the internet, 99% target women, and most of that content is pornographic.

“We need to kind of reckon with this new environment and the fact that the internet has opened up so many of these harms that are disproportionately targeting women and marginalized communities,” said Nina Jankowicz, founder of the American Sunlight Project.

The American Sunlight Project is an organization whose mission is to fight disinformation so Americans “have access to trustworthy sources to inform the choices they make in their daily lives,” according to its website.

As a result, more women are taking precautions with their online activity.

“The full impact of deepfakes on society is still coming into focus, but research already shows that 41 percent of women between the ages of 18 and 29 self-censor to avoid online harassment,” wrote Barbara Rodriguez and Jasmine Mithani for The 19th News.

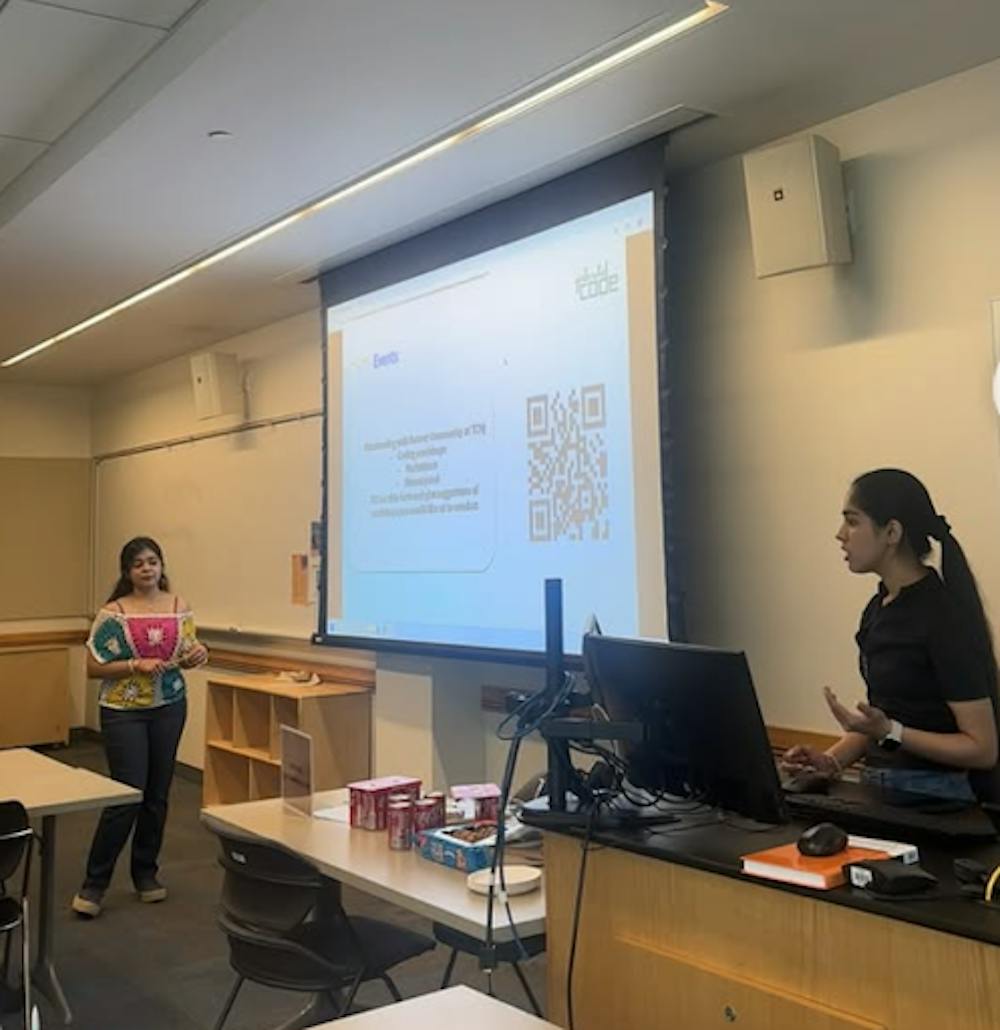

The lack of diversity within the field of computer science is the main reason Lakshita Kapoor, a sophomore computer science major and co-president of the College’s chapter of Girls Who Code, believes this technology is ripe for targeting women.

“...I feel like if we had a diverse group of people, I think that it wouldn’t be an issue,” she said.

According to the international Girls Who Code website, only 24% of computer scientists are women, down from 37% in 1995. It’s one of the reasons why Kapoor and the other TCNJ Girls Who Code co-president, Krittika Verma, also a sophomore computer science major, have joined the club.

In the meantime, the U.S. is taking steps to combat the rise in harmful deepfakes, especially those targeting women. Earlier this year, the Take It Down Act was signed into law. The act “criminalizes the nonconsensual publication of intimate images, including ‘digital forgeries’ (i.e., deepfakes), in certain circumstances,” according to an article by Victoria Killion on congress.gov.

“It also requires certain websites and online or mobile applications, identified as ‘covered platforms,’ to implement a ‘notice-and-removal’ process to remove such images at the depicted individual’s request,” the article continues.

Verma believes the law will make women safer “to an extent.” Kapoor thinks the law will not completely discourage everyone from using the technology for malicious purposes, as no law has.

Until the laws and policies surrounding deepfakes are amended and can better protect everyone, Verma and Kapoor believe education about AI is key to keeping everyone aware and safe. They believe students at the College should take the AI courses the computer science department offers.

“Specifically, I think we ought to be educated in the way we’re using it because I feel like some people don’t even know and they’re using it,” Verma said, adding, “that’s where the threats come.”

Kapoor went further, recognizing that the courses may have prerequisites or students may not have the time to take a course. She wants the department to host weekly “voluntary workshops” where anyone can come learn about the technology.

More information on how the College deals with harmful AI usage can be found in the Student Code of Conduct policy. Additionally, victims of illicit deepfakes or sexual misconduct can find information and resources through the College’s Title IX website.